Keynsham Enterprises Ltd

1 | History

Keynsham Enterprises is a UK-based software company. Founded in 2016, the company focuses on large-scale distributed data handling for fleet and swarm management. Its main asset is experience from Geostationary Communication Satellite (GEO COMS) operators and large-scale data centre management. Keynsham Enterprises aims to launch a swarm coordination demonstration in 2021 and to begin permanent fleet management operations in 2022 or 2023.

Growing out of a NASA challenge at Columbia University, Keynsham Enterprises was founded in 2016 by aerospace engineers and telecom entrepreneurs to bring new-space based solutions to satellite operators around the globe.

We have since gained strong international support, in the form of infrastructure, technical expertise and funding, from ESA/Innovate UK, investors connected to the telecom industry, and established satellite manufacturers in Canada and Europe.

Based in the UK, we have recruited experienced space talent and are collaborating with some of the best experts in the industry to offer global telecommunications companies GEO satellite life-extension options at a fraction of market costs.

⦁ Incorporated in 2016 by a technical founding team of telecom analytics executives.

⦁ Established an R&D relationship with the Salford Space Rendezvous Laboratory to develop target-agnostic robot controllers.

⦁ Assembled a low-cost, high-turnaround supply chain for new swarm drone technologies.

⦁ Hired a talented executive team with previous experience at SSL, DARPA and SES, and an R&D team from MIT, Draper and IIT.

2 | Services & Technology

We are building core competency in two areas:

⦁ Autonomous navigation.

A plug-and-play module that allows assets to track targets using several optical sensors and a computer, and that will ultimately be used for high-precision formation flying and rendezvous operations between flying platforms.

A revolution in flying platform design will move us towards modular, fractionated and other architectures that turn assets into rapidly upgradeable platforms rather than customized, single-mission relics of the past. Core competency in navigation will be crucial for these platforms to thrive.
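The module description does not say how measurements from the several optical sensors are combined. As a minimal sketch, assuming each calibrated sensor reports a line-of-sight vector to the target and all sensor positions are known in a common body frame, the target position can be estimated as the point closest, in the least-squares sense, to every sensor ray. The function name `triangulate` is hypothetical and this is a generic technique, not Keynsham Enterprises' own algorithm.

```python
import numpy as np

def triangulate(origins, directions):
    """Least-squares estimate of the point closest to a set of 3D rays.

    origins    -- (N, 3) sensor positions in a common body frame [m]
    directions -- (N, 3) line-of-sight vectors to the target (any length)
    Requires at least two non-parallel rays.
    """
    origins = np.asarray(origins, dtype=float)
    directions = np.asarray(directions, dtype=float)
    # Normalise each line of sight to unit length.
    units = directions / np.linalg.norm(directions, axis=1, keepdims=True)
    A = np.zeros((3, 3))
    b = np.zeros(3)
    for o, u in zip(origins, units):
        # P projects onto the plane orthogonal to the ray, so P @ (p - o)
        # is the perpendicular offset of a candidate point p from this ray.
        P = np.eye(3) - np.outer(u, u)
        A += P
        b += P @ o
    # Zeroing the gradient of sum ||P_i (p - o_i)||^2 gives (sum P_i) p = sum P_i o_i.
    return np.linalg.solve(A, b)
```

With parallel lines of sight the 3×3 system is singular and `np.linalg.solve` raises `LinAlgError`, so a real module would need to check sensor geometry before fusing.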

3 | Long Term Plans

The ultimate goal of Keynsham Enterprises is to catalyse the development of a self-sustaining flying drone economy.

The biggest issue with space today is the massive capital requirement, which stops startups and organisations from accessing the industry and developing novel products and services. The game is limited to large companies and governments with little appetite to try new things.

The current players tend to develop proprietary, highly customized systems, which keeps other companies from building in-space capabilities and services on top of what already exists.

This won’t last forever: innovation-led, high-growth industries have shown time and again that this sort of ring-fencing does not last. Take the internet: it doesn’t make sense for everyone to own computers to store data and develop applications. We rely on data centres, search engines and other complex services that transform individual systems into a self-sustaining, interconnected architecture in which personal devices are just the interface.

The distributed and modular systems our technology enables will allow us to move hardware and cargo between systems, fly constellations of different types of specialized drones, continuously develop this infrastructure and enable more complex services to be built on top of it. That’s the Keynsham Enterprises endgame, and a boon for those in today’s drone race.

Keynsham Enterprises is launching a multi-demonstration mission in 2021 and can provide swarm coordination services on a case-by-case basis, working in collaboration with its customers and the relevant regulators.

We are offering the following frequencies:

Uplink:

⦁ Ku band: 12.5-14.8 GHz

Downlink:

⦁ Ku band: 10.7-12.75 GHz

4 | Optical Tracking, Guidance and Control

Core IP in self-driving drones

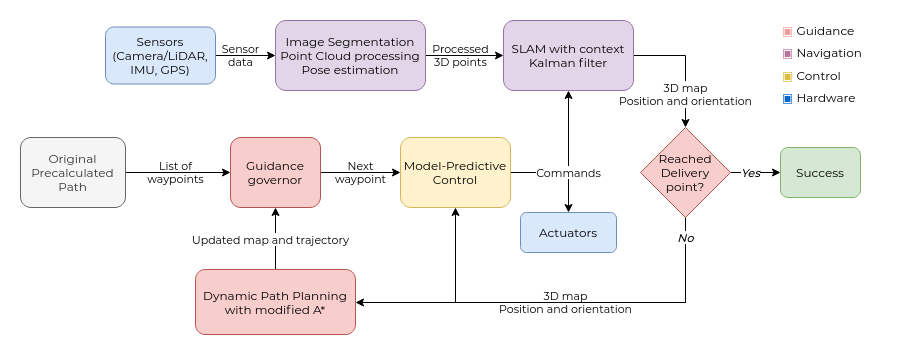

Our core IP is in the domain of Guidance, Navigation and Control. We build custom software systems that allow our drones to track other flying objects, translate that information into relative trajectories to navigate to them, and determine the pose of these targets in order to execute high-precision maneuvers.
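Turning repeated position fixes on a tracked object into a relative trajectory can be illustrated with a constant-velocity alpha-beta filter. This is a generic textbook tracker, not the GNC software described above: the class name, the gains `alpha` and `beta`, and the assumption that relative-position fixes arrive every `dt` seconds are all hypothetical, and the sketch does not estimate attitude (pose).

```python
import numpy as np

class AlphaBetaTracker:
    """Constant-velocity alpha-beta filter on 3D relative position (illustrative)."""

    def __init__(self, alpha=0.5, beta=0.1, x0=(0.0, 0.0, 0.0), v0=(0.0, 0.0, 0.0)):
        self.alpha = alpha                      # position correction gain (hypothetical)
        self.beta = beta                        # velocity correction gain (hypothetical)
        self.x = np.asarray(x0, dtype=float)    # estimated relative position [m]
        self.v = np.asarray(v0, dtype=float)    # estimated relative velocity [m/s]

    def update(self, z, dt):
        """Fuse one relative-position fix z taken dt seconds after the previous one."""
        x_pred = self.x + self.v * dt            # predict with constant velocity
        r = np.asarray(z, dtype=float) - x_pred  # innovation (measurement residual)
        self.x = x_pred + self.alpha * r         # correct position
        self.v = self.v + (self.beta / dt) * r   # correct velocity
        return self.x.copy(), self.v.copy()

    def predict(self, t_ahead):
        """Extrapolate the relative position t_ahead seconds into the future."""
        return self.x + self.v * t_ahead
```

`predict` returns the extrapolated relative trajectory a guidance loop would consume. A flight implementation would more likely use a Kalman filter with explicit sensor and process noise models, and a separate pose estimator.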

5 | Computer Vision system for autonomous navigation

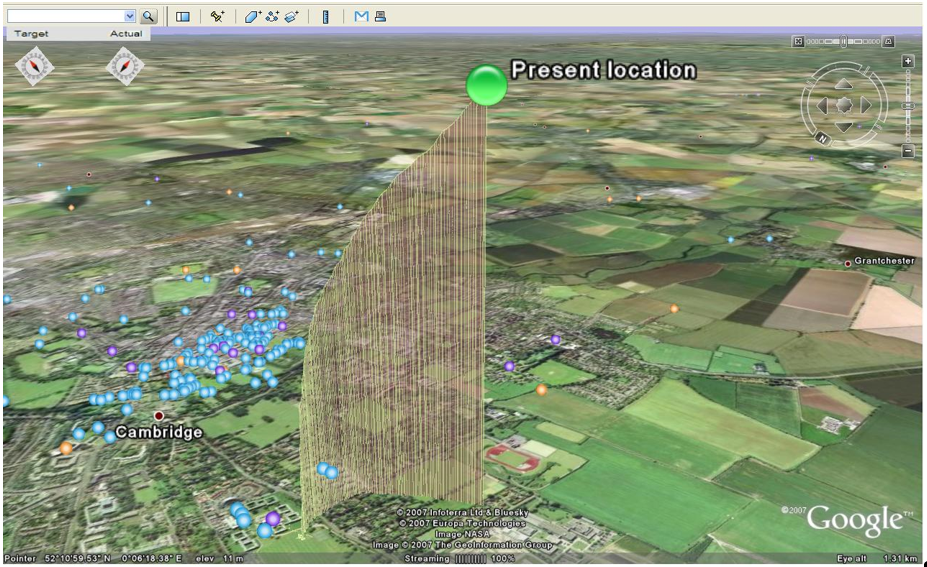

Keynsham Enterprises’ technology enables the autonomous navigation of drones and other unmanned vehicles; similar techniques allow land vehicles to carry out autonomous deliveries. GPS waypoints are not precise enough for urban navigation, so computer vision techniques are required. To achieve a high level of autonomy all the way to the point of delivery, the proposed system uses two distinct techniques: semantic segmentation of 2D images and 3D point cloud processing.
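Fig. 2 shows DeepV2D producing depth maps from image sequences. One standard way to turn such a depth map into a 3D point cloud is pinhole back-projection, sketched here under the assumption that the camera intrinsics `fx`, `fy`, `cx`, `cy` are known from calibration. The function name is hypothetical and the sketch is illustrative, not the proposed system's own pipeline.

```python
import numpy as np

def depth_to_points(depth, fx, fy, cx, cy):
    """Back-project an (H, W) depth map into an (N, 3) camera-frame point cloud.

    Pixels with zero, negative or non-finite depth are discarded.
    """
    z = np.asarray(depth, dtype=float)
    h, w = z.shape
    # u = column index, v = row index, both with shape (H, W).
    u, v = np.meshgrid(np.arange(w), np.arange(h))
    # Inverse pinhole model: x = (u - cx) z / fx, y = (v - cy) z / fy.
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    pts = np.stack([x, y, z], axis=-1).reshape(-1, 3)
    valid = np.isfinite(pts[:, 2]) & (pts[:, 2] > 0)
    return pts[valid]
```

Points come out in row-major pixel order, so the first point corresponds to the top-left pixel.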

Semantic segmentation using the DeepLabV3+ architecture (Yurtkulu et al., 2019) provides cost-effective spatial awareness. Our implementation of the detector network differs from the original in ways that make it better suited to embedded systems. When semantic segmentation is used in tandem with 3D point cloud processing, the system can assign labels to all the points in the reconstructed 3D map and assess the relative positions of points of interest (gates, stairs, patios), stationary obstacles (trees, buildings, fences) and moving obstacles (bicyclists, pedestrians). Using this information, it can navigate autonomously to the delivery point without causing harm.
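Assigning labels to the points of a reconstructed 3D map can be sketched by projecting each point into the segmented image and copying the class of the pixel it lands on. This assumes the points are expressed in the camera frame of that image with known intrinsics; the class ids and the `CATEGORY` mapping are hypothetical, since the model's actual label set is not given.

```python
import numpy as np

# Hypothetical class ids; the real label set of the segmentation model is not given.
CATEGORY = {
    0: "free_space",         # e.g. road, pavement
    1: "point_of_interest",  # gates, stairs, patios
    2: "static_obstacle",    # trees, buildings, fences
    3: "moving_obstacle",    # bicyclists, pedestrians
}

def label_points(points, seg_mask, fx, fy, cx, cy, unknown=-1):
    """Give each camera-frame 3D point the class id of the pixel it projects onto.

    Points behind the camera or outside the image keep the `unknown` label.
    """
    pts = np.asarray(points, dtype=float)
    mask = np.asarray(seg_mask)
    h, w = mask.shape
    labels = np.full(len(pts), unknown, dtype=int)
    idx = np.flatnonzero(pts[:, 2] > 0)  # only points in front of the camera
    # Forward pinhole projection, rounded to the nearest pixel.
    u = np.round(fx * pts[idx, 0] / pts[idx, 2] + cx).astype(int)
    v = np.round(fy * pts[idx, 1] / pts[idx, 2] + cy).astype(int)
    inside = (u >= 0) & (u < w) & (v >= 0) & (v < h)
    labels[idx[inside]] = mask[v[inside], u[inside]]
    return labels

def group_by_category(points, labels):
    """Split points into the named categories of CATEGORY."""
    pts = np.asarray(points, dtype=float)
    return {name: pts[labels == cid] for cid, name in CATEGORY.items()}
```

Grouping the labelled points by category gives the planner separate sets of points of interest, stationary obstacles and moving obstacles whose positions relative to the vehicle can then be assessed.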

Using the data supplied by the computer vision navigation, the guidance and control modules can update the 3D maps used for trajectory planning. Navigable regions can be flagged according to terrain steepness, the obstacles present and other factors. Because the robots can climb stairs and traverse uneven terrain, these flags do not mark impassable regions but regions of different weights. These weights are an added heuristic in a modified version of the A* (A-star) path search algorithm (Hart et al., 1968). This way, the robots can react to changes in the environment and dynamically plan a new trajectory.
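The modified A* itself is not described. The sketch below is one plausible reading under stated assumptions: terrain flags are turned into per-cell traversal costs (the `terrain_weight` slope thresholds are hypothetical) and added to the path cost, while a Manhattan-distance heuristic stays admissible because every step costs at least 1. Replanning after the environment changes is simply another call with the updated weight grid. Both function names are illustrative.

```python
import heapq
import math

def terrain_weight(slope_deg, obstacle, max_slope_deg=35.0):
    """Hypothetical mapping from terrain flags to a traversal-cost weight (>= 1)."""
    if obstacle or slope_deg > max_slope_deg:
        return math.inf                  # treated as impassable
    return 1.0 + slope_deg / 10.0        # steeper cells, e.g. stairs, cost more

def weighted_astar(weights, start, goal):
    """A* on a 4-connected grid; entering cell (r, c) costs weights[r][c] (>= 1).

    Returns (path, cost), or (None, inf) when the goal is unreachable.
    """
    rows, cols = len(weights), len(weights[0])

    def h(cell):
        # Manhattan distance is admissible because every step costs at least 1.
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    g = {start: 0.0}
    parent = {start: None}
    open_heap = [(h(start), 0.0, start)]
    closed = set()
    while open_heap:
        _, g_cur, cell = heapq.heappop(open_heap)
        if cell in closed:
            continue                     # stale heap entry
        if cell == goal:
            path = []
            while cell is not None:      # walk parents back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1], g_cur
        closed.add(cell)
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            w = weights[nr][nc]
            if math.isinf(w):
                continue
            ng = g_cur + w
            if ng < g.get(nxt, math.inf):
                g[nxt] = ng
                parent[nxt] = cell
                heapq.heappush(open_heap, (ng + h(nxt), ng, nxt))
    return None, math.inf
```

A weighted cell such as a staircase is avoided when a cheaper detour exists but still used when it is the only route, which matches the idea that flags mark regions of different weights rather than impassable regions.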

Fig. 1: Example images from the Cambridge-driving Labeled Video Database (CamVid) labeled using our deep learning model.

Fig. 2: DeepV2D used to obtain 3D depth maps from sequences of 5 images from the KITTI dataset (Top: scene 1, Bottom: scene 2).

Fig. 4: The high-level diagram of the navigation software proposed by Keynsham Enterprises.

Contact

71-75 Shelton Street, Covent Garden, London WC2H 9JQ, United Kingdom

www.keynsham.eu

info@keynsham.eu